The All-Knowing Father

What chatGPT has given us is a new interface to knowledge. For the first time we have a way of interacting with the whole of it, rather than its parts. This is a gift, the real dangers are within us.

0. What is this essay about?

We call machines " intelligent ", but we are not sure what the word means. We marvel at chatGPT's "intelligence," but most of us have no clue how it really works. However, we’re quick to sign petitions because we’re afraid AI will kill us. But looking carefully at this, we can quickly discover that AI isn't as intelligent as we think it to be. Although any AI is capable of operations that we associate with intelligence, it lacks autonomous will or any form of consciousness, and more importantly, it lacks any form of understanding. What Large Language Models brought to light is a new quality of human knowledge, not some demonic hidden intelligence in the machines. LLMs are a "smart interface" to knowledge more than they are intelligence in and of themselves. We are not dealing with an "intelligent" machine, but rather with a new kind of powerful interface to knowledge. We've always used intelligent interfaces to connect to computers, and we've been dreaming of a natural language interface for 70 years. Since the advent of the Internet, there has been steady progress towards this goal. The difference with LLMs is that by them we can see and interrogate human knowledge through a new synchronous interface, connected to the whole and not to some parts. To fear an interface is absurd. The current public discussion about AI is wrongly skewed to focus on the dangers of illusory AI autonomy, while the real danger is in projecting upon it something it's not - a power without limits. The obsession with safetyism, magnified into mass paranoia during COVID, brought us here: we're afraid of our own shadow, so we see it everywhere. As an antidote to this shadow, we're peddled a new religion, Scientism, that will give us back everything we love, including life without the pain of work and eternal life in the cloud, while at the same time threatening to wipe us from existence. The great danger of this discourse is that we will project onto AI the image of the new god of scientism, "The All-Knowing Father". Those signing letters to stop research on AI are just reinforcing this projection. Therefore, understanding the true nature of AI is not a theoretical, speculative question. It's a vital one.

Late edit: At the time of the first draft, there was only the initial letter from the Future of Life Institute, calling for "a pause of at least 6 months in the training of AI systems more powerful than GPT-4", signed by Musk and other luminaries of the tech world. This was a strange move for the reasons made clear in the text below, but at least they were trying to make an argument. However, something called the "Center for AI Safety" released another statement today, consisting of a single, bizarre paragraph signed by many scientists and public figures, including Sam Altman. No argument at all, only this:

This sounds really weird (societal risks? pandemics? nuclear wars?) and made me publish this essay earlier than I wanted to, maybe people will understand what we are really talking about in the case of LLM, which is what chatGPT is, no matter what version it is. It's my belief that we are being scared into some sort of regulation that will severely limit access to AI, except for those "designated" as "safe users" by governments and techno-elites. The stakes are high because AI gives unprecedented access to knowledge, and issues of copyright and money may be central to these initiatives to regulate the field. It's no coincidence that pandemics and nuclear wars are being brought into the mix; they need to be so scary that no one asks questions. To counter this, we really need to start using our heads.

1. "Intelligence" and knowledge

It is remarkable how certain we are that AI systems are intelligent, given the difficulty we have in agreeing on a definition of intelligence. This word has been so abused in marketing of new technologies lately1 that anyone worried about catastrophic AI scenarios must first ask a simple question: before artificiality, what is intelligence? Can we agree on a clear definition? Intelligence has one of the most polymorphic definitions of all words, usually referring to the ability to learn and understand abstract relationships between objects in the world. It seems that "intelligence" is defined as what we need to find the answer to the questions "how?" or "why?" using already integrated causal structures, previously learned. In chatGPT's own words:

But instead of splitting hairs, as chatGPT seems to do, we can look at the definition of intelligence in a slightly different way: in terms of knowledge. Any kind of knowledge. There's a vast body of knowledge we're immersed in, abstracted by the human race over millennia in what we call "culture" in the broadest sense, in addition to the information that comes to us in real time through our senses. All the art, science, myths, stories, etc. - whatever humanity has ever created - it's written, drawn, and stored somewhere in words or images. We are educated by it, we live in it, it grows us, we grow it.

"Knowledge" is for us a kind of "library" that we can turn to when we need to understand something new. It's a static vision: knowledge is inert, "trapped" in books or databases or other people's minds, and only comes alive in our minds when we use it. One of the main points of this essay is that we're wrong: knowledge has always been dynamic, has a hidden structure, and changes as it interacts with us. It's shaped by us, but it also shapes us. It is both our child and our parent. Humanity is a kind of Simbiot, formed by the human population and its knowledge, which is a womb in which civilization grows. We are both the product and the source of this vast knowledge, and whatever our hereditary abilities, without it we turn into savages losing most of what defines us as human. Knowledge is, in the end, the reservoir of our humanity, connecting generations to what makes them truly human and preserving their continuity over time. We didn't realize this until now because our interaction with knowledge has been discrete and asynchronous - like finding a book, reading it, searching for what you're looking for, absorbing the knowledge, putting the book back on the shelf. A discrete, discontinuous operation that seemingly leaves the knowledge unchanged. Until now.

We can view all the information coming through our senses as the direct, time- and space-bound connection to reality, while we can see knowledge as a connection to reality that transcends space and time, connecting our minds to times and places we'll never experience by our senses.

But what about intelligence? On a personal level, being intelligent is simply the ability to make the best use of this vast human knowledge2 out there for a particular purpose. Intelligence is about the quality of one's connection to that body of human knowledge, that is, the ability to make the best use of knowledge as a tool for one's purposes. And, boy, is there knowledge everywhere! Whatever you want to do, it seems easier than ever to do it. The Internet has truly become "The Human Library of All Knowledge", decades before AI became the hot topic. It looks like you can learn quantum physics, the intricacies of political strategy, how to grow asparagus, or even how to build AI models, all you need is the determination and some elusive ability to do it (we know from experience that some people are better at it than others). What's different in our time from, say, Kant's, is the lower barrier to knowledge and the shorter time lag in acquiring knowledge. But once you have access to all the information you need, there is now, as there was then, that elusive ability to make the best use of it - to find relevant bits, discard others, integrate them, test them, and so on. - A set of operations that really makes the difference in how knowledge is used by individuals. Some call it "smartness," others "brightness," but I think that's what we really mean by ”intelligence".

So I propose that intelligence, in human terms, is defined as the ability to make the best use of existing human knowledge for a specific purpose. The purpose can be to understand something, but it can also be quite practical, like finding the fastest way to a destination, fixing a broken bicycle, or even a highly complex one, like building a spaceship. Those who do it better and faster are the ones we call " more intelligent"3. This definition has the advantage of being universal, we do not need to define an endless list of "intelligence types". The thing to keep in mind is that intelligence exists only in relation to a task or purpose - i.e. in relation to someone's will - not in itself. There is no intelligence in the knowledge itself, intelligence can manifest itself only when someone uses that knowledge for a specific purpose.

2. Language and knowledge, part one: the big picture

The basic function of human language4 is to provide an abstraction of reality and personal experience - its objects, their properties, their interaction, their value, to the individual. ("object" is used here in its most general, abstract sense; it can be a thing, but also a concept or a vision). This abstraction layer is needed so that humans can share and construct their perceptions of reality. When two people talk about a dog, they need to name it in a coherent way5. In fact, the most basic role of language is to provide coherent definitions of perceptions of reality for a group. At first, perhaps mostly for practical reasons; but as abstraction grew with humanity, also for a lot of non-immediately practical reasons - such as poetry and philosophy - which also refer to the quality of perceptions rather than the perceptions themselves.

Language is the structural matrix of knowledge, as we call "knowledge" all the stored language sequences that describe properties, relations, causalities, values of some objects of reality. In this respect, language has two decisive roles:

- it is a system for storing knowledge

- It's the means of communication to modify, improve, grow and spread knowledge.

Until now, these two roles have been completely separated: once knowledge is stored (in books, pictures, and all sorts of recordings), it is "dormant" and inert. When it becomes the subject of discussion, it changes and flows in mostly unpredictable ways, under the action of the human mind and intuition (the essential agent of change), but also changing the human mind in the process.

The human mind was the only "theater" where "stored" knowledge came to life. One way to look at what Large Language Models (LLMs, of which chatGPT is the most famous) do, is to see them as a new theater where knowledge comes alive without a mind. It may very well be that all this stored knowledge needed was a new type of interface, one that allows us to do more than just retrieve a list of "inert" knowledge (like a Google search results list), by using how bits of knowledge are connected to each other and mashing them up based on the relationship among them.

Bits of knowledge are actually representations of bits of reality, with their objects, relationships, properties, values, etc.; you can think of knowledge as a network of relationships between representations and properties attributed to objects.

Take this sentence: "you can think of knowledge as a network of relationships between representations and properties attributed to objects" Every single word has a meaning, even parts of it - like "know" from "knowing". Each word is either a representation of an object of reality (say "you"), or a functional part relating properties to objects (say "meaning"), or a description of some kind of dynamic activity or relationship with some other words (like all verbs). This is what we call the grammar of a language, and it gives us the way by which this sum of bits of meaning gets a larger overall meaning. Grammar is much more than a set of rules, it’s the inherent order that gives the whole a greater meaning than the parts, it's the connecting lines between the nodes (words) in our language, creating meaning and, in the end, the "web" of knowledge.

Now, grammar is an abstract set of rules about how words relate to each other that we either learn specifically or get intuitively when we learn a new language. The point is that these grammar rules are present in all expressions of a language, even if you have never formally learnt any of them (as most of the people today). It's not something we consciously apply to the language, it's in the language itself - and that's why we use them correctly even if we don't know anything about them. Since knowledge grows in the matrix of language, these rules determine the meaning of every piece of knowledge out there. This is the hidden order in the vast tangle of words out there.

The point is that language is not an instruction set on which we build knowledge, its "inner workings" are the DNA of knowledge itself. There is no way to separate knowledge from language, and given the limited number of words, it should be clear that knowledge is to be found in how the words are related and arranged to each other (their "network"), their relative "distance", and not in the words themselves. Things are more complex, and we'll get into that later, but for now we just need to keep this in mind: language is the mainframe of knowledge.

To really understand why LLM's are just a new interface to knowledge, the ultimate one, we first need to look more closely at the evolution of our relationship to knowledge, i.e. the "old" interfaces.

3. Ingesting and digesting knowledge

How do we find and use the knowledge out there? Using a "food" metaphor - our minds feed on knowledge, hence the popular "food for thought" - we can see that there are two distinct phases whenever we tackle a new problem that requires new knowledge:

1. ingesting knowledge -it is not a "dumb" process, it requires discriminating intelligence, because not everything out there is "edible" or suitable for later "digestion". You need to know how to find your sources, assess their value, extract relevant information from them, and then store all this primary knowledge in a proper, easy-to-use system for later on. In a sense, you are "cooking" the raw knowledge you have gathered into a palatable, nutritious meal you will eat. Given the staggering amount of knowledge out there on virtually every subject, this is a serious and difficult task, much more important than it looks.

2. digesting knowledge -this is when you turn what you have absorbed into internal knowledge, in your own formulation, for your own purposes. This is where personal intelligence is hard at work, integrating new knowledge into existing knowledge and drawing practical conclusions from it. You do this by playing with the new knowledge, reformulating it in your own terms, and then integrating it with the existing knowledge in your mind. In short, this is "classical" learning.

It used to be quite difficult to "ingest" knowledge because you had to have special access to it (be a student or be part of a guild). That knowledge was in libraries or in some people's heads, it could take years and it was relatively hard to get; there was a high barrier to just accessing knowledge. There was no interface to knowledge, just groups of people "guarding" it. Today, it's on the Internet and seems to be available to everyone. But this "availability" is a rather tricky issue, because anything that is easy to get may be of dubious value.

4. Knowledge as library: solving the "finding" problem in the Internet era. The rise of the machines.

The Internet, now the nervous system of human culture, didn't quite live up to expectations at first. Besides putting most information just a few clicks away, it also brought with it a host of problems. How it dehumanizes us, or how it is used to destroy our freedom, may be the subject of another essay (there are plenty of them out there anyway6). What we are really interested in is the deeper reason why the Internet has failed us from the start: solving the "access" problem didn't really solve the "finding" problem. It actually made it harder. Having everything connected and accessible soon became like having access to the biggest library in the world, where all the books were mixed up; without a clear plan of the premises, it was almost impossible to find anything. I remember the times in the early 90s when the Internet came to Romania with 28 kbps modem connections; despite its terrible slowness, that early Internet was a real ADHD machine: you started looking for something like an interview with Bill Bernbach, you didn't find it, but from link to link you found yourself, many hours later, reading about medieval English literature, something you never wanted to do. It was fascinating, mesmerizing, and completely useless. You almost never found what you were looking for. Hyperlinks, which were supposed to connect similar content, created a mirage: you were literally twenty clicks away from any site, but you couldn't find anything. Gone were the days when, however hard it might be to get to, at least you knew knowledge was somewhere. Now it was easy to get to, but you didn't know where to find it. We were lost in a maze of our own making.

The first solution to this frustrating problem was the old office structure of "directories," which was recycled into computer metaphors with the advent of the PC. Some of us remember that the Internet giant of the '90s, Yahoo, was essentially a giant curated directory of websites, neatly organized into categories. That's how you found what you were looking for. Although anyone could submit a site, you were at the mercy of the curators because the hierarchy was set by humans. Either you knew exactly what you were looking for, or you wasted an insane amount of time looking at hundreds of sites in a category to find what you needed. This was when the "hierarchy/relevance problem" became acute: how do you easily find what you need?

Then came Alta Vista, the first real search engine7, which used "robot crawlers" to jump from page to page, indexing the textual content of all the sites it could find, insanely fast (as Steve Jobs would say). Suddenly things looked a lot easier. You could just search for "growing tomatoes" and all the sites containing those words would be neatly listed. This is a first instance when some form of machine autonomy was managing knowledge for us - these pieces of software are "robots" and "they crawl" the web for us, on their own. But there was still a problem of hierarchy: many results were of poor quality, and in most cases the listing algorithms were easy to manipulate for commercial advantage. And there was also a problem of relevance: the results were dumb, the engine didn't understand the relationship between the words in your queries, so it mixed up all kinds of crops with all kinds of tomato-based products. It was slightly better than directories, but given the exponential growth of new sites and pages, and the "dumbness" of the listing algorithms, the "hierarchy/relevance" problem was exacerbated.

The next step was the ingenious idea of a ranking algorithm based on "links pointing to", a step that greatly increased relevance - and thus Google was born. Google was the first real breakthrough in the hierarchy/relevance problem, one that allowed us to use the Internet transparently at its true value. The real revolution was hidden in a simple idea - an algorithm that used not some hierarchical values assigned by humans, but some intrinsic organic qualities of the pages themselves, developed naturally by the users who build the content and reference it: the number of links pointing to the page. This initial intuition later led to a full-fledged network theory, but what is important here is not the detailed theoretical considerations, but the radical new approach: let the machines find the intrinsic structure of the crazy network of links, assigning a kind of "weight", similar to "importance", to each page, without any human intervention, because humans are slow and have hidden agendas. So that the "crawling robots" were not just chasing words to create huge indexes, but instead were harvesting a wide range of meta-textual parameters from each page to determine the relevance of the content. We have become so accustomed to this that we do not realize that since Google we have been living in a completely "machine-based" way of structuring information. Our interface with knowledge is no longer direct.

The advances in Goggle's Page Rank (PR) algorithm have been spectacular, we could soon ask questions and get back a lot of relevant web pages on that specific question. This worked best for what we call information - bits of very specific knowledge - but less well for knowledge, with its inherent complexity. For now, the thing to keep in mind is that to solve the "finding" problem, we used machines with newly invented algorithms that dig into the intrinsic meta-structure of the network at enormous speed to find relevance. And these algorithms (like Google PR) used completely new qualities of content than before the computer age. We have been living in a machine-driven, machine-interfaced knowledge environment for decades without realizing it. Without machines, we are already unable to properly interface with the vast amount of knowledge stored on the Web. In fact, there is no other way to effectively find something in this vast tangle of content except to use a machine-based interface that does a lot of selection and operations on the raw content before serving it up to us.

5. The old dream: language as an interface

Since the first day of computers, one of their main tasks, besides calculating things, has been to structure and manipulate an ever increasing amount of knowledge & information so that we can make better use of it or build more powerful and faster machines. At first using skeuomorphic metaphors that mimicked the real world ("folders" on the "desktop"), but soon in highly technical and novel ways. Dealing with huge amounts of information and knowledge can only be done by using machines to structure the information for us8. But when we use machines, we have to develop "interfaces. This is what an interface is: a control panel for us to give commands and for machines to give feedback. This is more general than your screen, operating system, keyboard and mouse, even your car dashboard with its steering wheel is an interface. Every machine or piece of software needs an interface to be used by humans. It may be that human-machine interfaces are an under-appreciated field of study that deserves much more attention than it gets. But for the purposes of this text, there is something else of importance in their evolution.

From the first clumsy and time-consuming perforated cards to the first attempts to search the Internet, there is only one Holy Grail: the natural language interface. "2001: A Space Odyssey," Kubrick's 1968 masterpiece, owes much of its enduring fascination to HAL, the supercomputer whose only interface is natural language. Since 1968, we have thought of natural language as the ultimate way to communicate with a computer. Beyond HAL, think of the impact the first Macintosh had when it spoke to its audience. Then Siri, Alexa, and all the "assistants" out there. We always wanted this, and companies invested like crazy to make it happen because they knew it was the number one selling point for a device: the ability to give commands in natural language9.

And that's exactly what we've seen in the evolution of search engines, a migration from "precise word queries" - where it's critical to ask very precise things - to relevant answers to "fuzzy" natural language questions - where sometimes we don't even know very well what we're looking for. Progress has been incremental, and we have gradually gotten used to each new innovation, not realizing that a simple Google search is now radically more effective in natural language than we ever dreamed of 20 years ago. Natural language as an interface to a machine" is not something that started with LLMs. It started mostly with search engines, where the not-so-new field of Natural Language Processing found its main application.

6. A short history of Natural Language Processing (NLP) before chatGPT. NLP in search engines

NLP started as a computer domain around 1950, on a ”ruled based approach” and mainly focusing on translation. There was a constant effort to ”translate” human language in machine language (basically numbers), and here are some of the milestones in different decades:

1950s-1960s: The Birth of NLP

The Georgetown-IBM experiment in 1954 marked the first demonstration of machine translation, translating Russian sentences into English.

In the 1966, researchers developed ELIZA , an early chatbot that simulated human conversation using pattern matching techniques.10

1980s-1990s: Statistical Methods and Corpus Linguistics

Statistical approaches gained prominence, driven by the availability of large text corpora and computational power.

Hidden Markov Models (HMMs) were widely used for speech recognition.

The 1990s saw the rise of corpus linguistics and the development of annotated corpora for training and evaluating NLP systems.

2000s: Rise of Machine Learning

The use of machine learning techniques, such as Support Vector Machines (SVMs) and Conditional Random Fields (CRFs), gained traction in NLP tasks like named entity recognition and part-of-speech tagging.

Statistical machine translation, driven by phrase-based models and alignment techniques, made notable progress.

2010s: Deep Learning and Neural Networks

Deep learning, particularly recurrent neural networks (RNNs) and convolutional neural networks (CNNs), revolutionized NLP.

Word embeddings, such as Word2Vec and GloVe, captured semantic relationships between words.

Neural machine translation, with the introduction of the attention mechanism, led to significant improvements in translation quality.

Transformer models, like the Transformer architecture, brought attention mechanisms to the forefront, achieving state-of-the-art results in multiple NLP tasks.

2018-2020: Pre-trained Language Models

Pre-trained language models, such as OpenAI's GPT (Generative Pre-trained Transformer) and Google's BERT (Bidirectional Encoder Representations from Transformers), emerged as powerful tools.

These models, trained on vast amounts of text data, demonstrated exceptional performance in various NLP tasks through fine-tuning on specific datasets.

NLP is actually a fairly mature field of computer science, built on many theoretical advances over the past few decades, all of which have made possible the breakthrough in LLMs we have seen in the past few years. ChatGPT or any other LLM out there is not the result of a single innovation or a single new theory. They may look like a "singularity" because of the amount of attention they got overnight, but they are really just the tip of the iceberg that is NLP.

It should be noted that search engines like Google have been using a combination of Natural Language Processing (NLP) techniques to provide relevant search results to users long before chatGPT or other LLMs. While the exact algorithms and methods used by search engines are proprietary and constantly evolving, here are some key NLP techniques that have been used by search engines since long before LLMs came to prominence:

Query Understanding: Search engines employ techniques to understand the user's search query. This involves tokenization, lemmatization, and part-of-speech tagging to extract the key terms and their meanings. Stop-word removal and stemming may also be applied to improve query understanding.

Information Retrieval: Search engines utilize NLP techniques to retrieve relevant documents or web pages from their indexes. This involves indexing and storing documents efficiently to enable fast retrieval based on query terms. Term frequency-inverse document frequency (TF-IDF) is a commonly used technique to determine the relevance of documents based on the query terms.

Named Entity Recognition (NER): NER is used to identify and categorize named entities, such as names of people, organizations, locations, and other entities, in the search query or the indexed documents. NER helps in understanding the context and can improve search result relevance.

Synonym Detection: Search engines employ techniques to identify synonyms or related terms to expand the query's scope and improve the search results. This may involve leveraging lexical databases or word embeddings to find semantically similar terms.

Query Expansion: Search engines may expand the user's query by including additional terms or synonyms to enhance search results. This can be achieved using techniques like query reformulation, word embeddings, or leveraging external resources, such as WordNet or Knowledge Graphs.

Natural Language Understanding (NLU): NLU techniques are employed to understand the intent behind user queries and provide more accurate results. This can involve techniques like intent classification, sentiment analysis, or language modeling to better understand the user's search intent.

Relevance Ranking: Search engines employ various algorithms to rank search results based on their relevance to the user's query. These algorithms take into account factors like keyword matching, document quality, user feedback, and relevance signals from various sources to determine the ranking order.

It is almost an unknown fact that Google uses such a large array of NLP techniques.

7. Information is not knowledge

So this is where we were a few years ago, before chatGPT took us by storm: each of us is able to connect to this immense body of knowledge - built over centuries by all of humanity and mostly digitized in the last few decades - using machines with software that incorporates many NLP advances to provide us with natural language interfaces. These "invisible" machines behind every search engine allow us to find and make the best use of knowledge according to our inclinations and talents. The knowledge is there; our ability to use it is "intelligence". While Google (or any search engine as an interface to digitized human knowledge) has made it relatively easy to find information, it is still difficult to become very good at anything based on the Internet alone; on average, we are clearly more informed and know much more than our parents did, thanks to the Internet. But there is also an ocean of half-knowledge and superficial information presented as profound knowledge or revelation. We are choking on poor quality content - everyone is now a "content creator", including those who should never create any! Using this true "Human Library of All Knowledge" is still a hard task, it takes a lot of determination, time and skill; that is, if you're looking for knowledge and not information. One of the reasons for the difficulty of finding the knowledge behind the information is that it's really hard to teach yourself, alone, without a teacher. Without someone to guide you, the knowledge you are looking for is hidden in a mountain of information (Google or any other knowledge interface retrieved for you). You need someone with a "treasure map" to point out the relevant locations of the pieces of knowledge you seek, and then help you "unlock" them.

There is a somewhat subtle distinction between "knowing" and " knowledge". "Knowing" is basically being in possession of some specific information, while "knowledge" is a corpus of theories, facts, and information related to a specific subject. Thus, a "knowledgeable" person is not the same as a person who "knows" things. To "know" things is to have information, to be "informed", and for that you can successfully use Google. To absorb knowledge, to become knowledgeable, you need more.

In fact, finding the right knowledge and then transforming it into understanding is a complicated process in which teachers play a crucial role. The teacher has the experience of others learning what he teaches, their difficulties, typical mistakes, misunderstandings. A teacher can give you the right books to read, and that is no small thing; without one, you end up reading a lot of useless or even misleading content, wasting time reinventing the wheel, or worse, taking mistakes as truth. The teacher has the map, while you only see what's in front of you. You can still reach your destination without one, but you won't be able to avoid the dangers and you'll take unnecessary detours; most of the time you'll just get lost. That was one of the biggest illusions Google and its smart algorithms and NLP techniques created for us: that being able to find everything would enable us to understand everything. Having a good interface to knowledge is only half of the solution. We took information for knowledge. But to get to knowledge, we still need something like a teacher, something a search engine cannot be. Information is not knowledge.

To overcome this limitation, people have been returning to human curation since the early days of search engines (remember Ask Jeeves?), empowered by the speed of new collaborative applications. Thirty years later, two ideas have stood the test of time. First, a kind of open source encyclopedia, collaboratively written and based on the assumption, usually correct, that many people correcting each other will arrive at some form of truth. This is Wikipedia, which was built from the ground up on a conversational model, albeit a highly asynchronous one - with large time gaps between corrections. Then a place where you can ask other people questions and have near-synchronous conversations about your questions. This is what Quora is. Its success is largely based on the main limitation of Google as a learning tool: its complete lack of feedback, personalized recommendations, and the ability to support conversations around very specific knowledge. That is, finding knowledge, not information. On Quora, you can ask a question and soon an expert will answer it! Or not.

8. From searching to finding - the "finding engine".

This has been a trend in almost all computer technology since its inception: to help people take in more information, or information that is more relevant to their goals and tasks, with an invisible effort to make the leap from information to knowledge itself. And there has been only one ultimate goal for interfaces since computers were created: to use them in a conversational way, in natural language.

If we think of intelligence as we have defined it, as "the ability to find and use existing knowledge to solve a specific task", it becomes clearer why Large Language Models (LLMs), of which chatGPT is the most famous today, look so "intelligent". They're just interfaces, but they actually take over part of the teacher role and solve part of the problem we used to need intelligence for - finding the right knowledge. The most impressive thing about them is their ability to very quickly deliver any kind of knowledge from "The Human Library of All Knowledge" (or, for the time being, from some well-defined training sets), in a completely readable and conversational way, exactly to your question; they do not deliver information (lists of bits of knowledge), but knowledge, which is the most radical change compared to Google. They look intelligent (and scary) because they look like they are doing some of the things we define as "intelligence" - manipulating initial knowledge - on the fly. But they're not.

If you look closely, there's nothing functionally new here; it's the same function as the Google algorithm: getting us the answer to our natural language question from the vast Internet. What's new is that LLMs have taken the search step out of the process entirely. They provide a way for us to go straight to finding what we are looking for. An LLM is, in a sense, a "finding engine" and not a searching one. The quality of what it finds for us is another matter entirely; there is no guarantee that what it finds is true or even logical. (There is also, as with search engines, a critical point in the quality of your query. A poorly formulated query will only return poor results).

Many of us have literally freaked out after having conversations with chatGPT about more philosophical or psychological subjects, crossing the boundaries of what we call "decency", or showing clear signs of mental derangement. But we fail to understand that this was already in the training set11. These are just ways of structuring existing knowledge. If you ask it a tricky or inappropriate question, it will give you only the right answer: existing (tricky and inappropriate) knowledge that fits your question.

We have already pointed out that the "natural language interface" has been the most important technological dream since the early days of the PC. LLMs seem to do just that, completely taking over the "ingestion" phase, guiding us and engaging us in a real conversation about the topic of interest. But instead of connecting us to another machine-as any interface does-they connect us to knowledge itself. When we ask something, it's not the machine we're asking, it's knowledge itself. LLMs don't provide a list of raw information for you to absorb later, like Google, they simply do our job of searching, finding, selecting, organizing, and abstracting knowledge from an astonishing number of different sources. Even in the style you want. They extract knowledge from information, and they "cook" these raw knowledge "ingredients" on the fly, allowing us to skip the time-consuming search and preparation of the ingredients altogether. They don't produce search results, they simply find knowledge, they are "finding engines".

If we think of bits of knowledge as the raw ingredients on which our minds feed, then the most apt metaphor to describe the evolution of our interface to knowledge is this:

In the "Classical Age”, you had "markets" (libraries) with "ingredients" (books) and "cooking classes" (universities) where you learned how to best cook your ingredients so that your mind could easily feed on them.

In the "Google Age", you simply order your list of ingredients online (search for knowledge and download it) and consult with others online about how to cook them. It may not be the best meal, and it may take a lot of trial and error. But it works for simple things.

In the "LLM Era", you have a Star Trek "replicator" that materializes the perfectly cooked meal you want out of (seemingly) thin air. (The fact that what you want may be something disgusting and tasteless is another matter. You just get what you asked for, already cooked.)

But the bottom line is this: you still have to eat it and digest it for your mind to grow. No matter how spectacular, an interface is just an interface, not a mind thinking in our place.

9. The inner workings of LLMs: language and knowledge, part 2

Like Google's PR algorithm, but on a much deeper level, LLMs find relevant connections for the content they've been trained on, using some organic, intrinsic content qualities that proved to work during their training phase.

One thing that complicates understanding LLMs is the highly eclectic training sets they use. But let's say we have such a machine that only looks at sentences about cats. So the training set is limited both in terms of subject matter (only cats) and in terms of knowledge extension (only single sentences with the subject "cat", not convoluted phrases). But in order for any computer to be able to work with these words, we first need a way to turn them into numbers. How do we do that?

One way was the way of the early NLP techniques: using a "rule-based approach". Since grammar gives us the basic role (hierarchy) of each word in a sentence, one idea was to write a piece of software that allows a machine to assign the "subject" property to the word "cat" in any sentence, such as this one: "Cats are the masters of humans, and we are their slaves. By looking at all sentences with "cat" subjects, the machine can build up a kind of "virtual knowledge base" of properties of cats and other subjects interacting with them, without ever having to understand anything, just by using intrinsic grammar rules. The "rules-based approach" was the wrong way to go, because while you get some meaning back from the machine, there's a lot of back and forth in translating language into numbers, and a lot of meaning gets lost in that translation.

Someone later came up with the idea that if we look at all the sentences about cats, we can find an order of words based on the most likely word to follow a given sequence of words. Probabilities are numbers, the language of machines, and so we can build a machine with "knowledge" about cats that can use our initial "prompt" to "answer" coherently with relevant knowledge from its training set. In fact, the logic of "next most likely word after a series of words" has both the grammar and syntax of a language embedded in it, so we do not need to teach our machine any grammar rules. This is a ”statistical approach”12 and not a ruled based one. The main insight here is the one discussed earlier when looking at grammatical structures and the fact that meaning is not in the words, but in how they are arranged and related to each other.

The concept behind this is called "word embedding", and what it really means is that some sort of "distances" are calculated between words in a training set. The "axis" for these "distances" are types of relationships between words, not space as in real distances. In our example, we can look at the average closeness of a word to the ideas of "animal" or "human", or how far away it is from a "good feeling" perspective, and so on, but also how far apart they are in different sentences. For example, "tail" is usually far from "human-typical words" and closer to "cat". Picking these "dimensions" can be done manually, but it is much more effective to have specific algorithms determine them based on the words in the training set.

As you can guess, this is not a 3 dimensional space, chatGPT for example uses 4096 dimensions and each word in its training set is actually a vector in a 4096 dimensional space. If you think we're talking about training texts with billions of words, the numbers and scale of this is mind-boggling, but this is what computers are really good at: crunching numbers on a huge scale (in fact, the larger the scale, the better the result).

The result of this training set structuring operation is a really complex "map" of the network of words in a training set, with meaning encoded in probabilities and abstract distances between words and groups of words13. And this lays the groundwork for what AI's have always been best at: pattern recognition.

In the end, we train this machine by giving it feedback on its "answers", allowing it to fine-tune its internal algorithms looking for patterns in the "distances" between words, since this was a "machine learning" machine from the start. What we get is a machine with knowledge about cats, able to answer any questions (prompts) about cats, not by thinking, but by looking at relationships between words (tokens) in the training set, taking into account prior human feedback (training).

To get a clearer idea of the steps an LLM (like Chat GPT) goes through when you ask it something like "Are cats the overlords of humans?", here is its own answer:

Understanding the Question: I analyze the structure and content of the question to grasp its meaning and intent. This involves parsing the sentence, identifying key terms, and determining the context in which the question is being asked.

Accessing Pre-existing Knowledge: As an AI language model, I have been trained on a vast amount of text from diverse sources up until September 2021. I access this pre-existing knowledge to find relevant information related to the question.

Retrieval of Relevant Information: I search through my stored knowledge to find information that directly addresses or is related to the question being asked. This can involve retrieving facts, explanations, definitions, examples, or any other relevant information that can help provide a comprehensive answer.

Evaluating and Selecting Information: Once the relevant information is retrieved, I evaluate its reliability, credibility, and relevance. I consider the source of the information, its consistency with other sources, and its suitability for addressing the question effectively.

Synthesizing and Formulating an Answer: Based on the retrieved and evaluated information, I synthesize the knowledge to create a coherent and comprehensive response. This involves organizing and structuring the information in a logical manner, ensuring that the answer provides meaningful and relevant insights to the question.

Generating the Response: I generate the final response by transforming the synthesized information into human-readable text. This includes shaping the response with appropriate language, providing explanations or examples where necessary, and ensuring the response is accurate and understandable.

The similarity of these steps to what we do when we assimilate knowledge can deceive us into thinking that there is some kind of "intelligence" behind going through a human-like process. It's not, because all of the above steps are actually translated into highly complex numerical algorithms that generate answers based on probabilities and "distances" and many other complex parameters that the machine has calculated in training. In fact, steps 1 to 4 are only used to identify a subset of knowledge that is relevant to the question. There is another, more important thing about steps 5 and 6 that is not obvious at first.

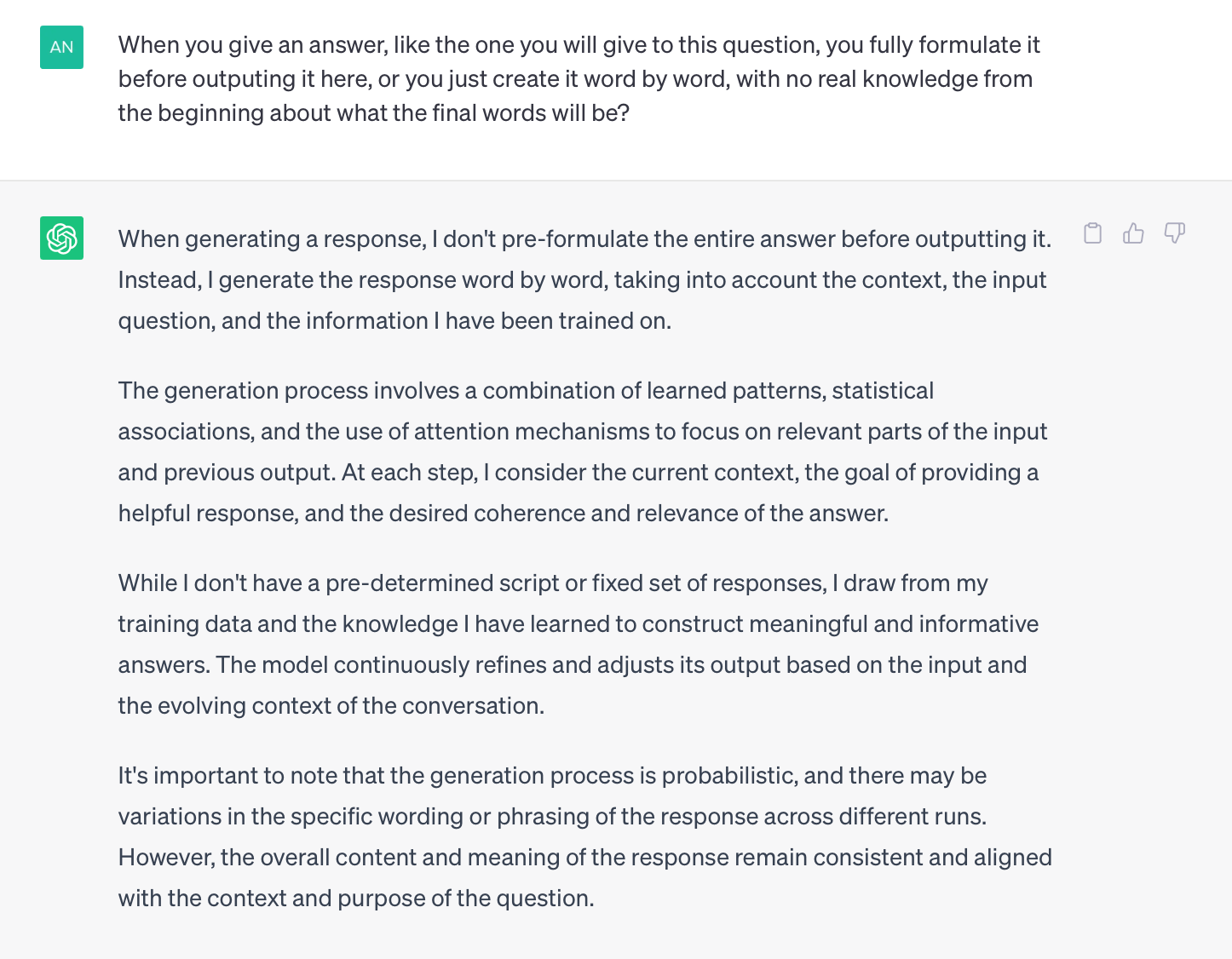

The crucial difference between an LLM and a human answering a question is that an LLM constructs its answer word by word, checking at each step what's the most likely word after the ones already found, in the subset relevant to the question, while the human builds its entire basic structure of the answer before answering. The way chatGPT's interface shows words appearing one after the other is not an "artistic effect", it's exactly how the machine works. The consequence of this is that at any point in a complex answer, the machine really has no way of seeing the whole answer, because it simply doesn't have it yet.

It's never looking for meaning, it's just looking for a complex pattern of probabilities and abstract distances between words, whereas a human first looks for meaning, "designs" it entirely in his mind, and then looks for the exact words needed.14

This is not how our minds work at all, and this is the ultimate argument for why the machine does not understand knowledge as humans.

10. Knowledge talks back to us: knowledge and language, part 3

Stored human knowledge (in books or on the Internet) is like a frozen instance of the dynamic description of reality, but once it's in our minds, it changes and evolves, it becomes "alive" simply by the way all propositions about something interact in our minds, but also among each of the minds of people in a conversation. In a discussion about cats among a number of people, we may quickly come to the point that cats are not overlords and that this is only a projection we make on them. By interacting, by communicating among ourselves, we establish a hierarchy of "truths" about cats, based on the most popular opinions15. LLMs are able to mimic this interaction through the process described above, using algorithms to generate a measure of "popularity" or "truth" for entire subsets of words selected as a result of a question. An LLM can appear to be a sort of "conversational machine", as if a vast conversation about any topic in the training set was going on inside it, but it only returns its conclusion. However, a key difference from a real human conversation between individuals is that LLM's algorithms only "navigate" between pieces of knowledge on an already built map, without adding or subtracting anything to them, to determine the "most probable answer" to a question.

Truth is a really complex and difficult subject that we don't want to go into here, but it may be difficult to understand how LLM' work their "magic" unless we go back to the conversation a group of people have to determine the truth about a subject. The way they do it is that they "negotiate" among themselves how to establish a collective truth based on everyone's personal knowledge. The end result is not an act of intelligence (although intelligence is involved in the arguments), but of "authority" that reduces itself to "popularity": the final truth is what most people in the group or in the world think is the truth. (This is exactly why disinformation and manipulation work best on a large scale.) The crucial thing is that only authority/popularity matters in the selection, not the intelligence of any of the participants. The way we search for answers on Wikipedia is actually the same - we rely on an external authority to determine the truth, based on the idea that so many people can't be wrong. Our intelligence plays an insignificant part in this.

So the pieces of knowledge "absorbed" by an LLM during training are actively related to each other as soon as we ask it a question, not based on meaning, but on what we might call "clusters of probability". The end result sounds very intelligent, but there is no process behind it that resembles human intelligence. It's the activation of an intrinsic quality of words (tokens) in knowledge to arrange them in the most probable (popular) order in response to a prompt. There is a strong connection between "popularity" and "probability", and this is why LLMs seem so eerily "true".

But LLMs are not intelligent at all! True, they outperform any human mind in scale, being much more capable of dealing with huge training sets, far beyond "all statements about cats", including all human knowledge on the Internet, or entire fields of human knowledge. We're so impressed by the size of this knowledge set, and the speed at which we get "answers", that we fail to notice that there is not one bit of conscience, understanding, or ego in what an LLM does. It's just a new interface that looks for internal connections in our knowledge - without a mind - not looking for the truth, but for the most popular mash-up of words for a given topic (prompt).

There is something important to note here: an LLM cannot improve any knowledge, it will only play within its training set. It can find new connections and stimulate us, but it cannot create new knowledge. The question is, where does new knowledge come from? If you look at scientific theories, there is a clear answer: intuition. It "comes" to the very few people who are obsessed with solving problems and sometimes literally dream the solution. All new valuable knowledge that we "discover" does not come from experience (that's where the problems come from), but from intuition. There is a long list of remarkable scientific revolutions based on the most irrational (at first) ideas revealed in dreams or images. We can start with the most famous, Einstein, who declared on several occasions that intuition and imagination are the key to new ideas. Or Kekule, who invented organic chemistry after dreaming of an Ouroboros (a snake eating its own tail). We can also mention Jung, who wrote almost all of his books as a result of a year of "active imagination" around the age of 40. The list is astonishingly long. In fact, the source of all new, true knowledge is transcendent - and this is actually the only solution to the problem Plato first saw: how can we know something without knowing it before? We can say it, even if it's not popular these days: all knowledge comes from God. Or "inspiration" - somewhere above our heads, for the agnostics. But certainly new knowledge is not the result of intelligence. Intelligence only manifests itself at the interface between knowledge and will.

I am quite sure that in the near future a new theory will emerge to better explain the high relevance of LLMs' output, and this will be truly revolutionary because it will mean revealing hidden structures of our language that LLMs use today without us (or anyone, really) knowing it.

For now, we have this: LLMs are the best interface to the "Human Library of All Knowledge", a great tool (even if in many ways it resembles a black box) that helps us learn and understand by finding knowledge, much more effectively than any machine before. But it's just an interface. We're afraid because they look like formidable competitors: they seem to "know" everything and can talk like us. But there is nothing human about them, no conscience, no will. It is just knowledge (our collective knowledge) responding to our demands (and this applies to Midjourney and other non-textual AIs as well). It's not a genie escaping from the bottle, it's just our collective knowledge shaping itself around our queries, almost instantly, on demand. It's not "someone" hidden in the code shaping the knowledge to our queries, it's the knowledge shaping itself to our queries. It's knowledge talking back to you.

The biggest difficulty in understanding this "self-shaping" concept of knowledge is that there is nothing like it in the physical world except for another human talking back to you when you ask something, "shaping" knowledge in his head in real time to answer your question. So we're quick to confuse an LLM with another person.

But the key point here is that this "self-shaping" knowledge that an LLM provides access to, has no ego and no conscience; and therefore no will. It's just knowledge, our own knowledge in a very different form.

11. Metamorphic Knowledge

"Metamorphic" is mostly used today with a rather narrow meaning, referring to rocks and geology, but its basic meaning is closer to "capable of transforming itself into a variety of forms by metamorphosis". It's a good word to define what knowledge suddenly appears to be through an LLM language interface: an always dynamic content, constantly shaping itself to meet your questions. It's not "someone" behind answering questions, it's simply the knowledge that shapes itself through a new kind of interface.

We haven't seen this before for two reasons. First, we haven't had the right tool to see such a vast amount of knowledge as a whole. You have to be able to go into space to really see the whole Earth. From space, it's suddenly much easier to see the atmosphere as a whole, with all its dynamics, than it is for the grounded observer to look at just one storm. By seeing the whole, we can quickly see that there are some new properties of the whole that cannot be found in its parts.

The sheer volume of human knowledge is difficult to imagine. Try to realize what percentage of the total human knowledge you have in your mind right now. Or, better yet, try to realize what percentage of all human knowledge all living human beings have in their minds right now. There is far more knowledge out there than exists in our minds at this moment. It's true that all knowledge was once the product of a human mind. But it separated from that mind, evolved in the minds of others, and then was stored somewhere, sometimes for millennia, before resurfacing and changing other minds and evolving again. For example, some of the Greek philosophy had blackouts of centuries in human culture, only to resurface and change our entire civilization. Or take Newton's "Philosophiae Naturalis Principia Mathematica", read it and be amazed at how different it was for Newton to get the original knowledge of what we now take as a simple truth: F=m*a. But this "simple truth" really changed a lot to get the form it has today, and like most truths, it will continue to evolve and change. In reality, there is far more knowledge stored out there than there is in all human minds at any given moment, and all of that knowledge is constantly evolving with every human interaction. We haven't really been able to see it as a whole until now.

Our interaction with knowledge has always been discrete and on very small pieces of the total human knowledge, because each individual's ability to absorb knowledge is severely limited compared to the amount of knowledge out there. We're like the blind man in the elephant story, but we don't touch the trunk or the ear and try to guess what the whole thing is, we've been looking at skin cells and haven't even had a chance to imagine the elephant. What AI and especially LLM are changing is that for the first time they are able to create a kind of image of the whole elephant. And this is really a different kind of animal than anything we have seen before.

The second reason we haven't seen knowledge in its metamorphic state is that it has literally been fragmented into billions of little pieces while it was stored in books, pictures, databases, all kinds of records, and people's minds, each piece separated from the others, looking inert. These pieces of knowledge only interacted with each other in people's minds, and mostly on rather narrow topics (like the branches of different sciences). Most of the time they were just inert pieces of content waiting for a mind. We can call this an "asynchronous" method of interacting with knowledge. Each of us individually, one piece at a time, retrieves pieces of knowledge, which we then put together in our minds, seemingly without changing the original source.

What an LLM does is that it literally merges all the knowledge in its training set into something new, a kind of "map" for all the pieces of knowledge that have some kind of relationship/link to each other. It really puts all these independent pieces together, finds links16 and thus creates a whole with new properties relative to its parts. It is really important to see that this “map” is completely built in training, and it has its almost final shape when you ask it a question. What the training process has done is to build a completely new representation of the knowledge in the training set, in an abstract space, one that it already has and uses to navigate when you ask it a question. It's like a real map you use to quickly find your way to something when you need to. Everything is already in there, you don't draw the map when you need to use it - you just follow its internal connections. In the same way, a LLM “map” is in its memory, after training, with all the knowledge stored in a new form, with its intrinsic "distances" among words and other thousands of parameters already calculated17. It’s literally knowledge in a new form. When answering, a LLM literally follows a “road” on this “map”, one point (word) at a time.

This is one of the most fundamental processes in nature: the whole is more than its parts. Any kind of interaction between parts creates new qualities that transcend the individual parts. Molecules are more than the sum of their atoms. Cities are more than the sum of their people. Software is more than the sum of its parts. And perhaps we will discover that knowledge as a whole, taken together, is more than its specific pieces. And the way we interact with it is something completely new, a synchronous method by which we interact with all knowledge at once, using something like chatGPT, an interface that connects to the whole, not the parts.

For man, "knowledge" was and still is a kind of "library" to be consulted when one needs to understand something new. It's a passive vision: knowledge is inert, "trapped" in books or databases or other people's minds, and only comes alive in our minds when we use it. The main idea of this essay is that we're wrong: knowledge is dynamic, changing by its own rules, but also by interaction with us. It's shaped by us, but it also shapes us. It is both our child and our parent. Humanity is a kind of simbiot formed by the human population and its knowledge, a womb in which we grow. We are both the product and the source of this vast knowledge, and whatever our hereditary abilities, without it we become savages, losing most of what defines us as human. The way we have interacted with it until now- each of us individually, a small piece at a time, i.e. asynchronously - has made us think that knowledge is a simple product of our intellectual activity. We thought we had knowledge, when in fact knowledge had us.

But this metamorphic knowledge is not a "person". It has no conscience or ego. It's not even intelligent, because it simply changes its form according to our wishes, it doesn't "want" anything. There is no will, no knowing, and no ego in it. It's just knowledge. Metamorphic knowledge.

12. Knowing everything, understanding nothing.

Seeing our own human knowledge in its metamorphic form is so fascinating that we mistakenly call its interface "intelligent" while almost forgetting why real human intelligence is still essential to our progress. Simply put, the "digestion" of knowledge is something a machine can never do for us. A concrete example may help you grasp the true meaning of this apparent truism.

Let's say you want to understand black holes and you go to Quora. Among the hundreds of threads on the topic, there is one with more than 400 replies titled "What is a black hole? How can we understand it?" You only have to read a few of them to see a new problem with Internet knowledge if you have some training in physics: a highly abstract mathematical problem that defies ordinary imagination is "explained" in simple pictures and everyday observations and language. In fact, it's really hard to understand a black hole, because the number of questions and the complexity of the mathematical tools you need to deal with them increase exponentially as you study them - which is why those who really understand things have the impression that they understand less and less. If you read the "black hole" thread above, you may think you understand it (i.e. explain it in terms of your pre-existing knowledge), but in fact you don't. The test is simple, you cannot use your "understanding" to explain the observed behavior of black holes, i.e. you have no practical use for your imaginary "knowledge" (except maybe to impress other people you know about complicated things you don't really understand). If you ask the same question on ChatGPT, you will get an even more schematic and low-level answer, perhaps because it has been trained on low-quality material on these subjects.

Things are even worse with another popular science darling, the Big Bang. How can you explain in layman's terms that time and space were created in the so-called "explosion"? You can't. Yet there are many of us who do just that, creating a false image of something unimaginable just to give others the illusion of understanding. This is our age, we know a lot, we understand almost nothing. This is mainly because information overwhelms knowledge.

One of the most striking examples of taking what we know for what we understand is what we call "news". Forgetting that it is impossible for anyone to formulate impartial news, we usually take partial (if not fabricated) information from the news and automatically draw conclusions (what we call "understanding") based on other people's opinions (presented as "impartial news"18). For example, we know from the news that Russia has invaded Ukraine and is bombing cities and civilians inside Ukraine, killing and destroying everything in its path. And it is only on the basis of this news that we feel we understand what is happening there, while in reality we don't understand much about the origins and the history of the conflict without investing time and effort into it to study it, and we certainly have no idea of the true reality on the ground. We have no real understanding, just some information. There is a common idea that people who know things are smart, so we want to know things to be seen as smart. (remember ”Who wants to be a millionaire”?) We usually fail to grasp this simple thing about intelligence: knowing is not understanding. To make the best use of existing knowledge for a specific purpose, you don't just need the right knowledge, you need to understand it. AI can do a lot to help us find and prepare knowledge for our tasks, but it can't help you understand that knowledge before you can use it to solve your problem. They can't learn for us, and for that reason alone, they can't create anything truly new in our place. Remember, you don't really understand black holes by reading answers on Quora, and you certainly don't understand them by reading chatGPT's answers to your questions. You understand them after years of serious study, building layers of knowledge upon which understanding becomes possible. Years that chatGPT can shorten, but never reduce to days.

They seem so frightening precisely because we take LLM’s vast "knowledge on demand" as a kind of understanding. The idea that knowledge is understanding is so ingrained in our minds that we believe that if it "knows" things, it must understand them! In reality, there is no understanding in any LLM, there is no one there who understands anything.

There is no "knower" who knows anything, it's just knowledge shaping itself to your demands.

13 The All Knowing Father

AI itself will transform our economy by creating truly powerful robots of all kinds - some of which are already here19. AI applications are already game changers in a wide range of fields:

Natural Language Processing (NLP): NLP applications include machine translation, sentiment analysis, chatbots, question answering systems, text summarization, and language generation.

Computer Vision: Computer vision applications involve image classification, object detection, image segmentation, facial recognition, video analysis, autonomous vehicles, and augmented reality.

Speech Recognition and Natural Language Understanding (NLU): These applications include speech-to-text conversion, voice assistants, voice command recognition, voice biometrics, and sentiment analysis in spoken language.

Autonomous Systems: Autonomous systems, such as self-driving cars, drones, and robotics, utilize AI algorithms to perceive and interact with their environment to perform tasks without human intervention.

Healthcare and Medicine: AI is used for medical diagnosis, disease prediction, drug discovery, radiology image analysis, personalized medicine, and patient monitoring.

Financial Services: AI applications in finance include fraud detection, algorithmic trading, risk assessment, credit scoring, customer service chatbots, and personalized financial recommendations.

Generative Content Applications: These applications involve the generation of content by AI, including text generation, image synthesis, music composition, artistic style transfer, and virtual reality content generation.

Cybersecurity: AI is employed for threat detection, anomaly detection, malware detection, user authentication, network security, and fraud prevention.

These are just a few examples, but the impact of the LLM in particular is more transformative, not because machines are going to think for us (because they really don't, they calculate for us, which is much less than thinking), but because we suddenly have easier and faster access to all human knowledge. What is really happening, and what we do not yet realize, is that we are transforming passive knowledge (dusty books in libraries and huge databases on the Internet) into active, metamorphic knowledge that shapes itself to answer our questions. This will dramatically change the way we learn and absorb knowledge. We will become more productive and creative because our energy will be spent less on digging for knowledge and making sense of it, and more on thinking and creating. But what LLMs do is not thinking, it's something completely new, a new way of accessing knowledge in its metamorphic form. You don't ask someone to retrieve knowledge from their memory or books, think to formulate their answer, and then come back to deliver it to you. You ask knowledge itself. It's not the machines that we bring to life, but our own collective knowledge. And perhaps this is how knowledge should always be: fluid, alive, ready to shape itself around a question. What will really change from the ground up is the way we use knowledge. But on the way lie unexpected dangers.

For many of us, chatGPT suddenly looks like a virtual teacher who can teach us everything: The All-Knowing Teacher. The need to personalize this "talking thing", to connect it to a "person" is overwhelming. But LLMs can only play part of the teacher's role, first by simplifying the ingestion step ("cooking" ready and nutritious "meals" of knowledge for us), and then by engaging us in a relevant conversation to fine-tune the content brought up in response to your questions (a bit like seasoning and adjusting the dish to our specific tastes and needs). But they completely fail at a crucial task a teacher has: helping you understand. Most people who study the subject don't realize that the output of LLMs is just our own collective knowledge, distilled for us in what may be its best form (given the input), already abstracted, formulated to our liking, integrated, and properly referenced. It's living, adaptive, metamorphic knowledge, but with no understanding behind it. Remember that answers come out of an LLM word by word, based on complex probabilities and "maps" of knowledge, without anyone knowing the final answer from the beginning.

Since the knowledge in a large and public web-based training set can contain a lot of wrong and stupid things, it's only natural that all LLMs will also give wrong and stupid answers. Their job is only to be an effective interface, not to check whether the content they serve makes sense.

This is very clear in the many cases where it gives us wrong answers, in a certain and definitive voice, only to backtrack too easily when the error is pointed out. An LLM just shapes existing knowledge to our needs, and that existing knowledge may contain a lot of bullshit20. With no conscience and no prior understanding of the subject, an LLM can never become a real teacher, one who knows how to distinguish true knowledge from false knowledge. But the way he masters the language can create the illusion that he does. The real danger is not in the tool, but in ourselves; specifically in the collective projection we're in the process of making on AI, specifically on LLMs: " The All-Knowing Teacher." Even if it's not called that yet, that's mostly how most people feel about it. A teacher or even sort of an Oracle.

But it's more than that. We already invest AI with a kind of superhuman, mythological power, one of deep religious extraction. We remember that "rabi" means "teacher," and that is how most of the apostles addressed Jesus before the resurrection. Jesus teaches, and he is certainly in possession of a kind of total knowledge. The " All-Knowing Teacher" is actually a " Wise Man" archetype and ultimately a God archetype, the " All-Knowing Father". And we can see it clearly, there is already a collective projection in the making, one in which the AI takes on the dimensions of a god. Sometimes as a "father" who provides for his children (the way technology infantilizes us is a serious point of discussion here), heals them and takes away their pain and work, even promising immortality in the cloud. But also as a vengeful God, like the God of the Old Testament, ready to wipe us out completely as unfortunate mistakes of creation. If HAL wasn't enough to prove the reality of this projection, isn't the rather absurd letter for a 6-month moratorium on AI research proof enough that this projection is happening?

There couldn't be a worse time for such a collective projection, because we are already living in a world where the religious vacuum is being filled by a new scientific gnosis - one that has replaced God with science. We already have all the signs that Scientism21 is the religion of our time, with its own church (science), clergy (the man of science), and inquisition (media control). We had a good taste of what this new religion is during COVID, when it became quite clear that it is not science, but blind faith in a mythical scientific superpower that drives it. There is a similar point to be made about the lack of scientific method in the Church of Climate Apocalypse, but that is beyond our scope here.

Until now, Scientism didn't really have a god, there was no face to worship, only abstract things (like the common good, progress, power). But with the advent of LLMs and other generative content AIs, because of their ability to mimic a real person, it's easier than ever to give the new God of Scientism a face. Even to make him a "person", The All-Knowing Father, under whom all the sects of Scientism will unite. And that would truly be a new Dark Age of humankind - humanity worshiping a machine without ego, will or soul, for the benefit of some new elites. If this sounds exaggerated, just look at the way many serious people write about AI today: it's like a sleeping demon capable of ending the world if awakened. If we're so afraid of it now, imagine how we'll look at it in a few years, and what freedoms we'll give up in the name of keeping the demon asleep.

It helps to say a few words about what a projection is for those who are unfamiliar with the term. The best place to start is with Jung and his definition at the end of "Psychological Types", his seminal work:

”Projection signifies the transferring of a subjective process into an object. It is the opposite of introjection (q.v.). Accordingly, projection is a process of dissimilation wherein a subjective content is estranged from the subject and, in a sense, incorporated in the object. There are painful, incompatible contents of which the subject unburdens himself by projection, just as there are also positive values which for some reason are uncongenial to the subject; as, for instance, the consequences of self-depreciation.”22

We really must withdraw this dangerous projection as soon as possible, and realize that it's we who are putting anthropological qualities into AI, very much like the primitive people for whom "la participation mistique"23 made them believe that rocks and trees were talking, when it was only their subconscious that was talking. We need to understand that, like the primitive man, it's our own unconscious heavy shadow we project on the machines. What we truly need is to embrace this gift of a new interface to the whole human knowledge and stop helping the new elites turn it into a political tool to scare humanity into submission. The only regulation needed is one to make sure the training sets are not ideologically biased and that universal access to them is guaranteed24.

We must stop fearing AI or hope to be saved by AI, because the real dangers to us or the way to our salvation are only to be found in ourselves. The only thing we should fear is us, not the machines.

14. The road ahead

Metamorphic knowledge will completely change the way we interact with all human knowledge, but it is expected to transform our civilisation in a broader way. Two things come to mind. The first is that the horror of ultra-specialisation, caused mainly by the limited amount of knowledge a human mind can absorb, will end. We'll need to understand how the big pieces fit together, not remember the details. Once you can have all the physics in an LLM, you can really look at all the physics at once, ask unimaginable questions like "what is the connection between Plank's constant and photosynthesis?" and get answers and connections that no human has been able to get other than by pure chance. This will also put an end to the wrong way of valuing content over real knowledge, which produces an endless stream of academic papers with no value. The value will again be in thinking and vision, not in the production of words and spreadsheets.

The second and more dramatic change, not to speak of economic disruption, is to be expected in the way we look at what we call 'truth' and 'reality'. There is, for the moment, a quiet movement in cognitive science and philosophy that tends to move beyond the mechanics of positivism that has stifled these fields in recent decades, and that is being driven by the ability to see knowledge as a whole rather than the sum of its parts, and thus to look at human consciousness across a wide range of fields and sciences. Because in the end, what we call 'reality' is just an ever-evolving vast body of knowledge of which we can only grasp bits and pieces, and what we call 'truth' in relation to that reality is just some statistical measure of popularity. We're going to need new definitions. There is another area related to machines that will collide with actual representations of truth and reality, and that is VR. We may soon experience virtual worlds that are indistinguishable from the real world, which will only add to the initial confusion. With our cultural backbone at stake, the transition will be painful. We are already experiencing some symptoms indicating that classical ideas of reality and truth are under siege, with the direct result that knowledge, trust and meaning are being pulverised into a myriad of unconnected atoms, leaving us lost and fearful. The Critical Thinking theories leading to the gender multiplication mania, the demolition of statues and history, the super-productive censorship and manipulation machine of the new oligarchy, are all just symptoms of a deeper movement, one aimed at a new understanding of reality and truth. For now it's just destruction and pain, a kind of collective madness, but it may be that this is inevitable before something new coalesces and reshapes human culture. It may be that at the end of this road we'll rediscover God, once we have a deeper understanding of the beauty that underlies His world. Certainly we'll have a clearer picture of existence as much more than the sum of its parts.

In Romanian, for instance, we do not have ”smart phones”, ”smart TVs” or ”smart vacuum cleaners”, we have "intelligent phones”, ”intelligent TVs” and ”intelligent vacuum cleaners”.